Hi, I am Max!

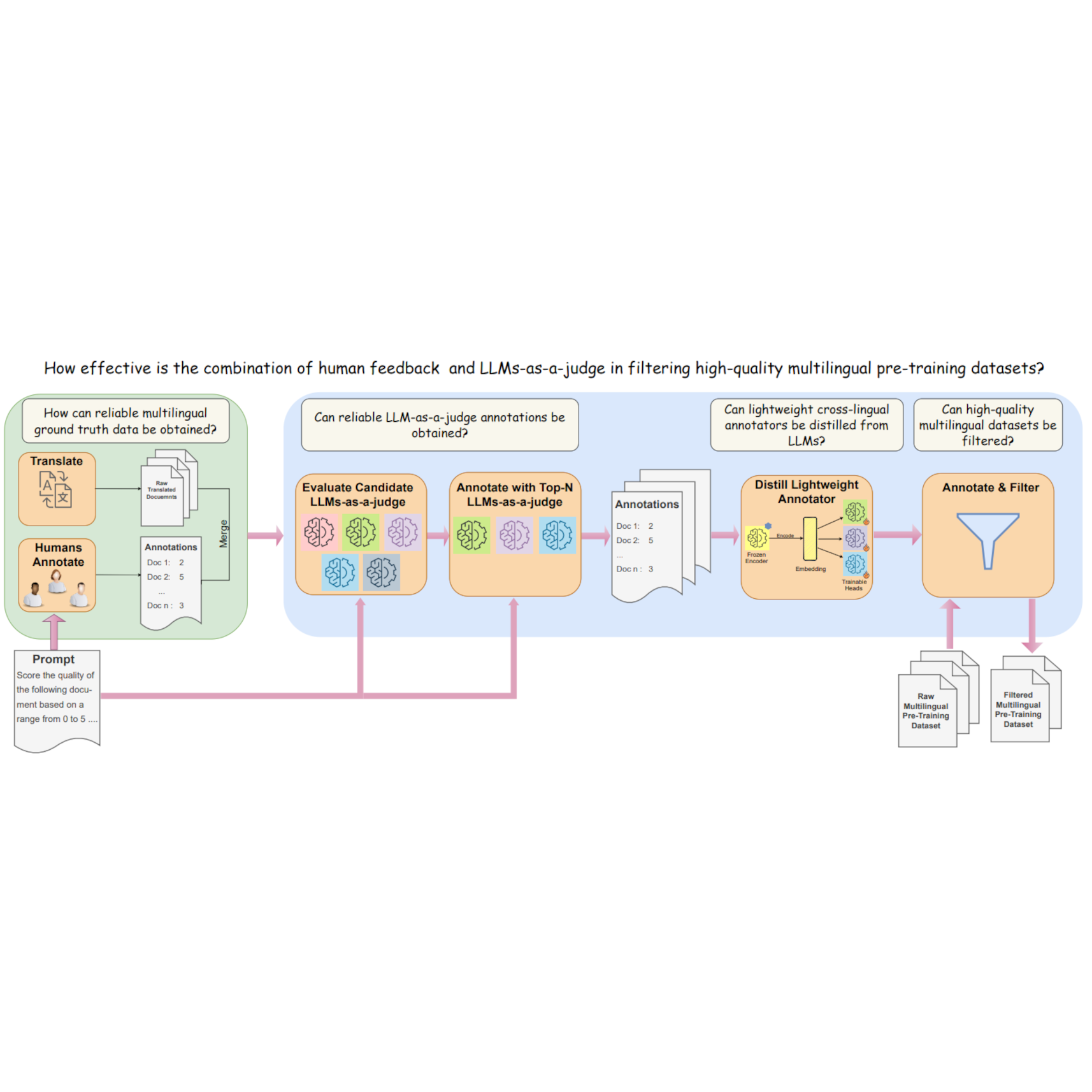

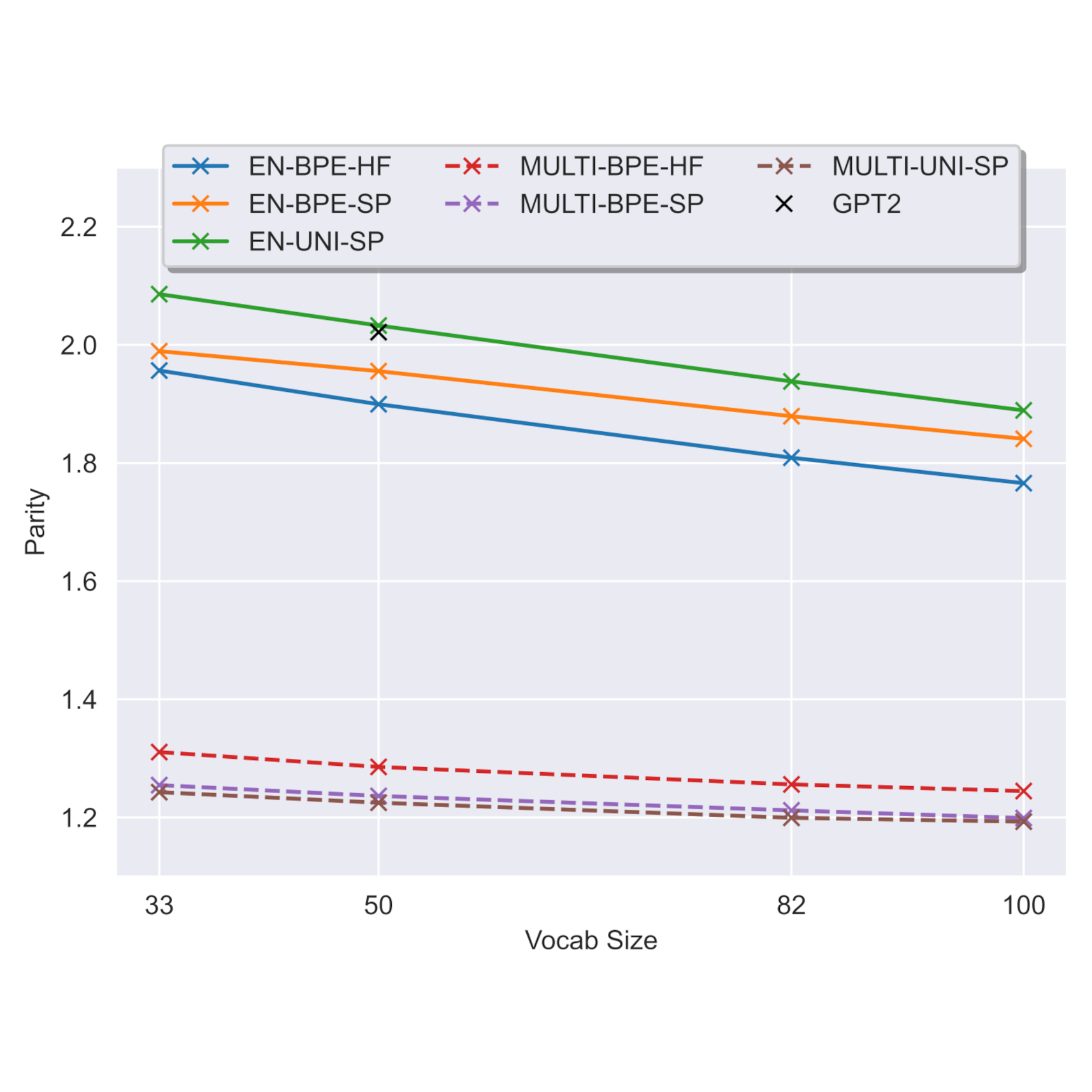

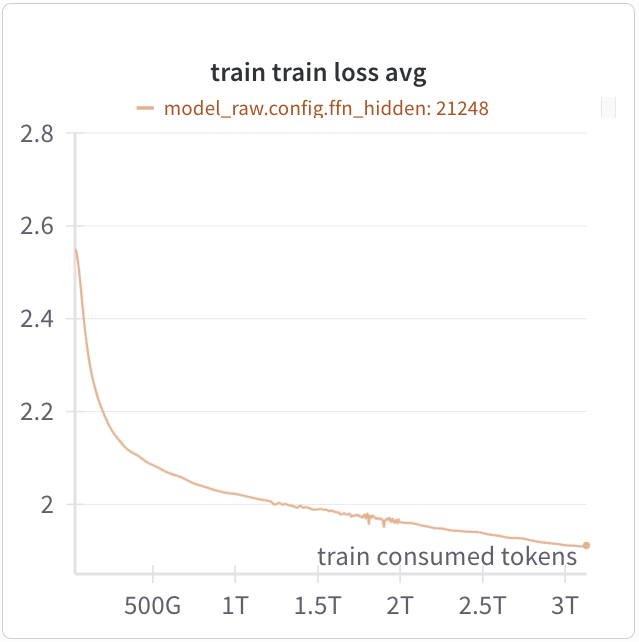

I’m a Machine Learning Researcher at Fraunhofer IAIS, focusing on large-scale pretraining of multilingual large language models (LLMs). I currently work on the Eurolingua project, where we’re developing a new family of European multilingual LLMs.

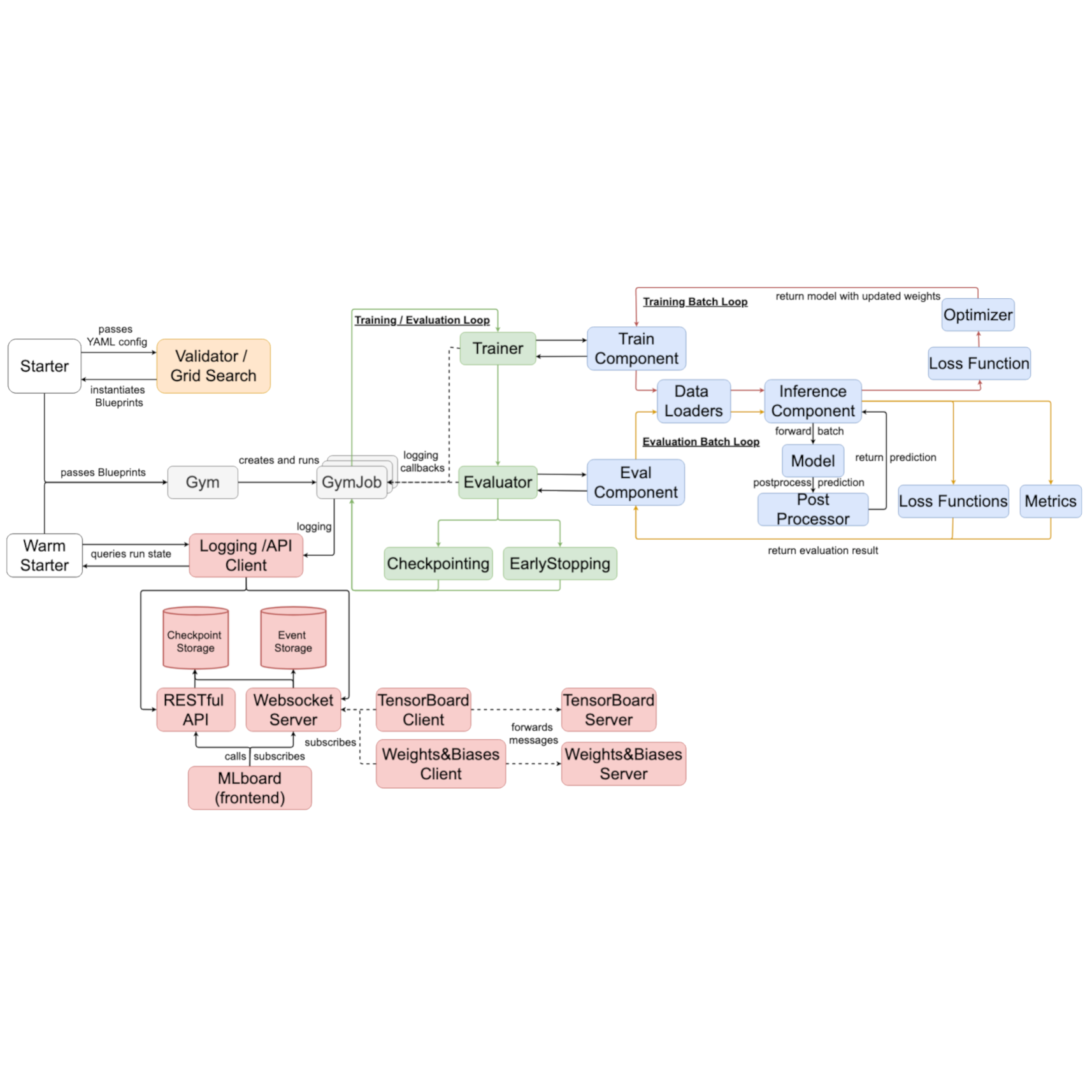

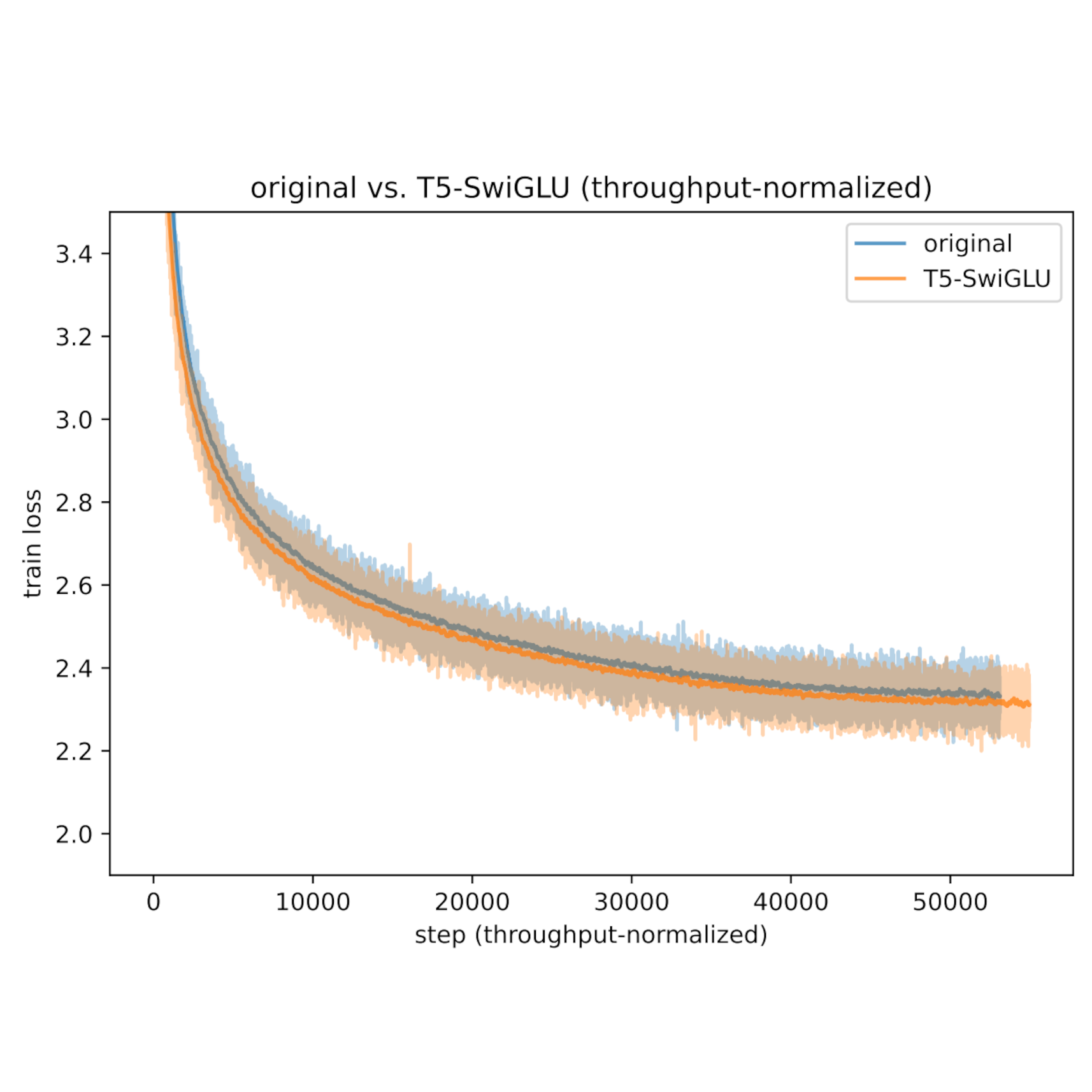

I’m the creator and lead developer of Modalities, an open-source training framework for efficient and reproducible LLM pretraining at scale. What began as a focused engineering effort now supports distributed training across thousands of GPUs and handles billion-parameter models using state-of-the-art techniques such as Fully Sharded Data Parallel (FSDP), Tensor Parallelism, and Activation Checkpointing. Today, Modalities powers all Eurolingua pretraining runs on Europe’s largest HPC clusters.

Earlier, I completed my PhD at the University of Bonn, where I worked on uncertainty estimation (i.e., teaching neural networks to be aware of what they don’t know). Before that, I received a Master’s in Computer Science (Intelligence Engineering) from Hamburg University of Technology.